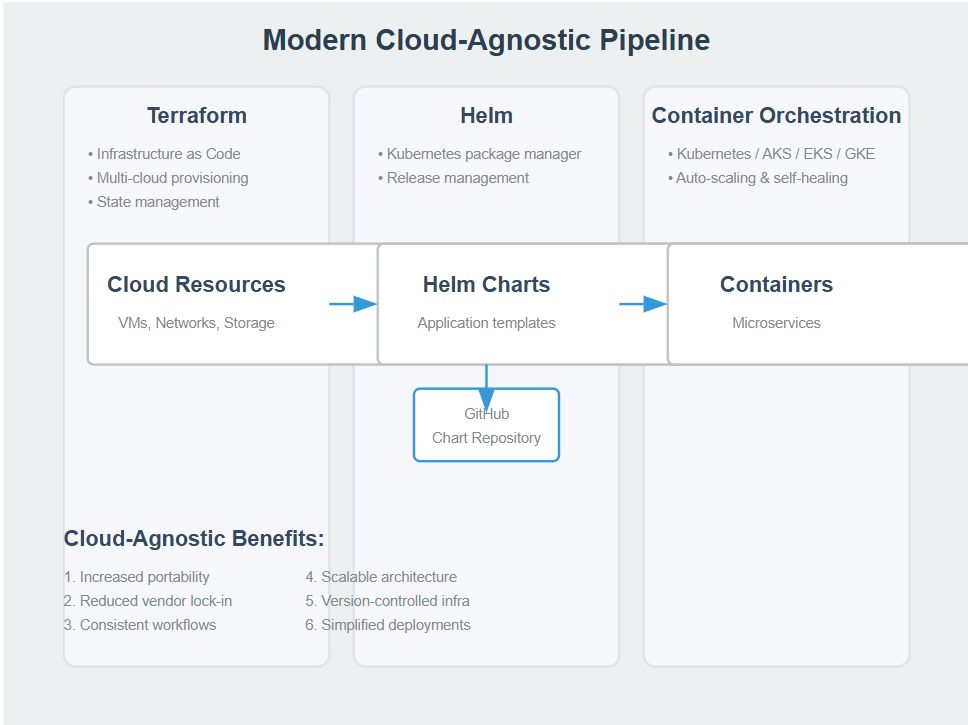

Cloud Agnostic CI/CD Pipeline and Environment

In today's world, a portable environment under proper version control is key to a healthy production setup. In this article, we discuss the advantages of cloud-agnostic design and how it can be implemented using Terraform, Helm and Kubernetes.

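NOTE: Agnosticism is the belief that the existence of God or the divine is unknown or unknowable. It holds that it's impossible to prove or disprove the existence of deities. In this article, "cloud agnostic" borrows the word to describe a design that does not depend on any single cloud provider.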

Let's say your company uses various services from its preferred cloud provider, and the cloud team has set up a production environment using that provider's cloud-specific solutions. What happens when the company decides to move to a completely different cloud environment?

Let's think it through. Say you used Azure Container Apps to serve your applications and Cosmos DB or a similar service to provide storage. What are the corresponding services in AWS? Perhaps S3 and ECS (Elastic Container Service) for serving applications, and DynamoDB as a NoSQL database similar to Cosmos DB.

Because these services are cloud specific, you would need to re-implement the same logic in a completely different cloud environment, in this case moving from Azure to AWS.

Avoiding Waste with Portable Design

What would be a logical approach to avoid such waste? You may already be thinking of the answer: why not use a service that works on all cloud providers and can be configured with code, so that we can keep the infrastructure and version state wherever we want?

Then let's use VMs: they are offered by all cloud providers, and we can simply deploy applications with tools like Ansible. Hmm, good try. But what happens when a VM cannot handle the load? Yes, scaling is needed. Do VMs scale? Actually, yes; however, personally I don't trust any VMs :).

Using VMs can be an option, but there’s a big question: how do they handle service discovery when they scale? To manage this, you'd need a service discovery tool like Consul from HashiCorp. But here’s the catch: setting up and managing such tools can get pretty complex and require a lot of engineering effort.

As a company owner, I’d be wary of diving into a setup that’s both intricate and challenging to manage. Why? Because while a more complex environment might offer flexibility, it can also consume a lot of engineering resources. On the flip side, a simpler setup might lead to higher operational costs. Balancing complexity and cost is key to maintaining a stable and efficient infrastructure.

Cloud Agnostic Environment

What? I thought we were building a CI/CD pipeline.

Yes, we are. However, to create a cloud-agnostic CI/CD pipeline, you also need to make your environment agnostic to specific cloud services. This will become more apparent when we get to the CD (release) stage.

Hmm, what does that actually mean?

In simple terms, it means using services that are available across all cloud platforms, avoiding vendor lock-in. By adopting this approach, you can focus on application development rather than being tied down by infrastructure choices.

So what services should we use?

If we agree to avoid cloud-specific services, VMs are still a good option. The key is to use an orchestrator that continuously monitors and manages the health of the environment, ensuring it runs smoothly.

As you may have gathered, I don't like unnecessary risks. Therefore, let's just consider Kubernetes (K8s). BUT... I didn’t say it will be cheap.

Kubernetes is a powerful orchestrator that can manage containers across different environments, making it a great fit for cloud-agnostic strategies.

However, the trade-off here is cost—both in terms of infrastructure and the expertise needed to set it up and maintain it. You’ll need skilled engineers to manage Kubernetes clusters, ensure scaling, handle updates, and troubleshoot issues.

The upside? Once it's set up, Kubernetes will give you the flexibility to move between cloud providers with minimal hassle, avoiding vendor lock-in and making your infrastructure more resilient in the long run. It’s an investment in reliability and futureproofing, but it comes with a price tag—whether that’s in terms of resources or personnel. So while it may not be the cheapest option, it's certainly one that provides peace of mind when scaling your operations.

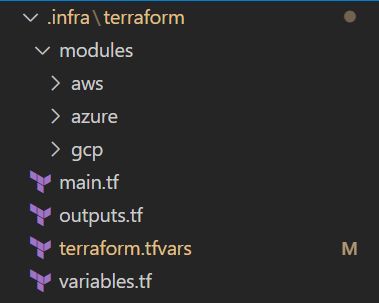

Great, now we have a portable environment that can run on any cloud provider. Hmm, I think it's time to consider how to keep the state of the infrastructure. Why don’t we use Terraform?

Terraform is a cloud-agnostic Infrastructure as Code (IaC) tool that allows you to define and manage your infrastructure across multiple cloud providers using a single configuration language. By using Terraform, you can maintain a consistent and version-controlled state of your infrastructure, automate provisioning and updates, and ensure that your setup is reproducible and maintainable. This aligns perfectly with our goal of a cloud-agnostic setup, providing flexibility and control over your infrastructure while avoiding vendor lock-in.
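To make the "version-controlled state" idea a bit more concrete, here is a minimal sketch of a remote state backend. It assumes the state lives in an Azure Storage account; every name in it is a placeholder and not taken from the repository. The same idea should carry over to other backends (for example S3 or GCS) if the state needs to live elsewhere.

```hcl
# Hypothetical remote-state configuration.
# All names below are placeholders chosen for illustration.
terraform {
  required_version = ">= 1.3.0"

  backend "azurerm" {
    resource_group_name  = "tfstate-rg"               # assumed resource group
    storage_account_name = "tfstateexample"           # assumed storage account
    container_name       = "tfstate"                  # assumed blob container
    key                  = "kubernetes-infra.tfstate" # name of the state blob
  }
}
```

With a remote backend, the configuration is versioned in Git while the state file is stored and locked in one shared place, so every engineer and pipeline run works against the same view of the infrastructure.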

KubernetesInfra Github Repository

The main.tf file is the entry point of the terraform apply command:

    # main.tf file
    module "kubernetes" {
      source = "./modules/azure"

      resource_group_name     = var.resource_group_name
      kubernetes_cluster_name = var.kubernetes_cluster_name
      location                = var.location
      node_count              = var.node_count
      vm_size                 = var.vm_size

      ARM_CLIENT_ID       = var.ARM_CLIENT_ID
      ARM_CLIENT_SECRET   = var.ARM_CLIENT_SECRET
      ARM_TENANT_ID       = var.ARM_TENANT_ID
      ARM_SUBSCRIPTION_ID = var.ARM_SUBSCRIPTION_ID
    }

Although this example targets Azure, the setup demonstrates the flexibility of a cloud-agnostic environment. The core idea is that by abstracting infrastructure behind Terraform modules and variables, you can switch to another cloud provider by replacing the module rather than rewriting the whole configuration.

The specific module for Azure can be replaced or configured similarly for AWS, Google Cloud, or any other cloud provider, thereby supporting a cloud-agnostic strategy. This approach ensures that your infrastructure definitions remain portable and adaptable, fitting seamlessly into different cloud environments as needed.
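As a rough illustration of that swap, the sketch below points the same module block at an AWS module. The ./modules/aws path and the region and instance_type variables are assumptions made for this example; they are not part of the repository, and the module itself would still have to be written.

```hcl
# main.tf, pointed at a hypothetical AWS module instead of ./modules/azure.
# The module path and the two variables below are assumptions for illustration.
variable "region" {
  type        = string
  description = "AWS region, playing the role of the Azure location."
}

variable "instance_type" {
  type        = string
  description = "Worker node instance type, playing the role of vm_size."
}

module "kubernetes" {
  source = "./modules/aws" # assumed path; this module would need to be written

  # Inputs that stay the same regardless of provider.
  # Both variables are assumed to be declared already, as in the Azure setup.
  kubernetes_cluster_name = var.kubernetes_cluster_name
  node_count              = var.node_count

  # Provider-specific inputs replace the Azure-only ones.
  region        = var.region
  instance_type = var.instance_type
}
```

The point of the design is that everything above the module (namespaces, Helm releases, the pipeline) keeps talking to a Kubernetes cluster and does not need to know which provider created it.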

Azure Module Overview

Let's check the Azure module to see what resources we are creating and what logic we're after.

    provider "azurerm" {
      features {}
    }

    resource "azurerm_resource_group" "main" {}

    resource "azurerm_kubernetes_cluster" "aks" {
      default_node_pool {}
      identity {}
    }

    provider "kubernetes" {}

    resource "kubernetes_namespace" "monitoring" {}
    resource "kubernetes_namespace" "production" {}
    resource "kubernetes_namespace" "staging" {}

    provider "helm" {
      kubernetes {}
    }

    resource "helm_release" "prometheus" {}
    resource "kubernetes_config_map" "alertmanager_config" {}
    resource "helm_release" "database" {}
    resource "helm_release" "web_app" {}
    resource "helm_release" "worker" {}

    resource "random_password" "grafana_admin_password" {}
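The outline above leaves the resource bodies empty. As a hedged sketch of how a few of these pieces typically connect, the fragment below wires the kubernetes provider to the AKS cluster's credentials, creates one namespace, and generates the Grafana password. The concrete values (namespace name, password length) are assumptions, and the actual module may be configured differently.

```hcl
# Sketch only: assumes an azurerm_kubernetes_cluster named "aks" is defined
# elsewhere in the module, as in the outline above.
provider "kubernetes" {
  host                   = azurerm_kubernetes_cluster.aks.kube_config[0].host
  client_certificate     = base64decode(azurerm_kubernetes_cluster.aks.kube_config[0].client_certificate)
  client_key             = base64decode(azurerm_kubernetes_cluster.aks.kube_config[0].client_key)
  cluster_ca_certificate = base64decode(azurerm_kubernetes_cluster.aks.kube_config[0].cluster_ca_certificate)
}

# One namespace per environment; "staging" is shown, the others follow the same shape.
resource "kubernetes_namespace" "staging" {
  metadata {
    name = "staging"
  }
}

# Random password for the Grafana admin user; length and charset are assumed values.
resource "random_password" "grafana_admin_password" {
  length  = 24
  special = true
}
```

Only the provider block is Azure specific here. The namespace and password resources would look the same on any provider, which is where the portability comes from.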

Kubernetes Resources Overview

The Terraform configuration provisions:

- AKS Cluster

- Kubernetes Namespaces for monitoring, production, and staging

- Helm Charts for Prometheus, web app, worker, and database

- Grafana Admin Password generated securely

Each namespace is logically separated, and each resource is Helm-managed for clear environment control.

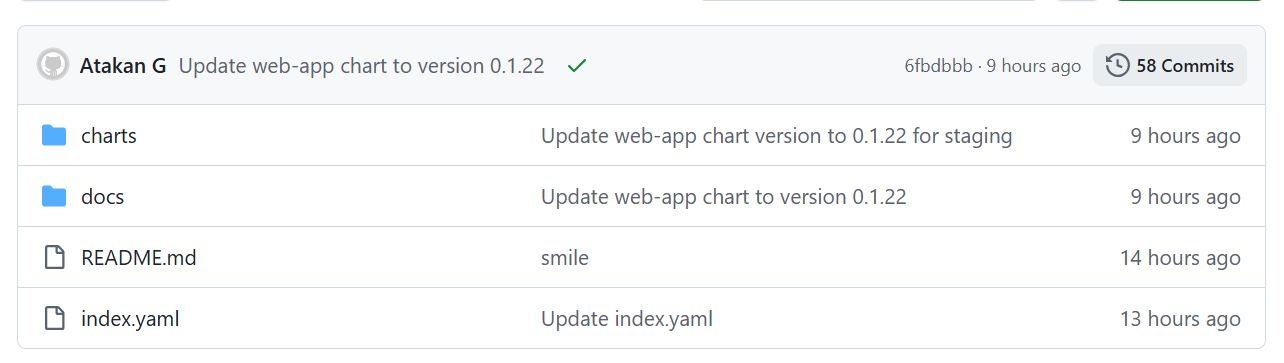

Helm for Versioning and Environment Management

GH-PAGES (Helm GitHub Repository)

This repository contains the Helm charts for all environments: production and staging.

Helm chart folders like /charts/web-app/ contain:

- deployment.yaml

- values-staging.yaml

- values-production.yaml

This allows templating with variables:

    image:
      repository: your-repo
      tag: 0.1.22

And Helm commands like:

helm upgrade --install web-app ./charts/web-app -f ./charts/web-app/values-production.yaml

helm upgrade --install web-app ./charts/web-app -f ./charts/web-app/values-staging.yaml
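The same per-environment selection can also be expressed on the Terraform side, where the helm_release resources live. The following is a minimal sketch, assuming an environment variable (hypothetical, not in the repository), a chart stored next to the module, and a configured helm provider; it picks the matching values file in the same way the two commands above do.

```hcl
# Hypothetical input variable used to pick the environment.
variable "environment" {
  type        = string
  description = "Target environment for the release."

  validation {
    condition     = contains(["staging", "production"], var.environment)
    error_message = "The environment must be either staging or production."
  }
}

# Assumes the helm provider is configured and a namespace with the
# same name as the environment already exists in the cluster.
resource "helm_release" "web_app" {
  name      = "web-app"
  chart     = "${path.module}/charts/web-app" # assumed local chart path
  namespace = var.environment

  # Loads values-staging.yaml or values-production.yaml.
  values = [
    file("${path.module}/charts/web-app/values-${var.environment}.yaml"),
  ]
}
```

Keeping one chart and switching only the values file means staging and production differ in configuration, not in templates, which makes promoting a tested image tag to production a small, reviewable change.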

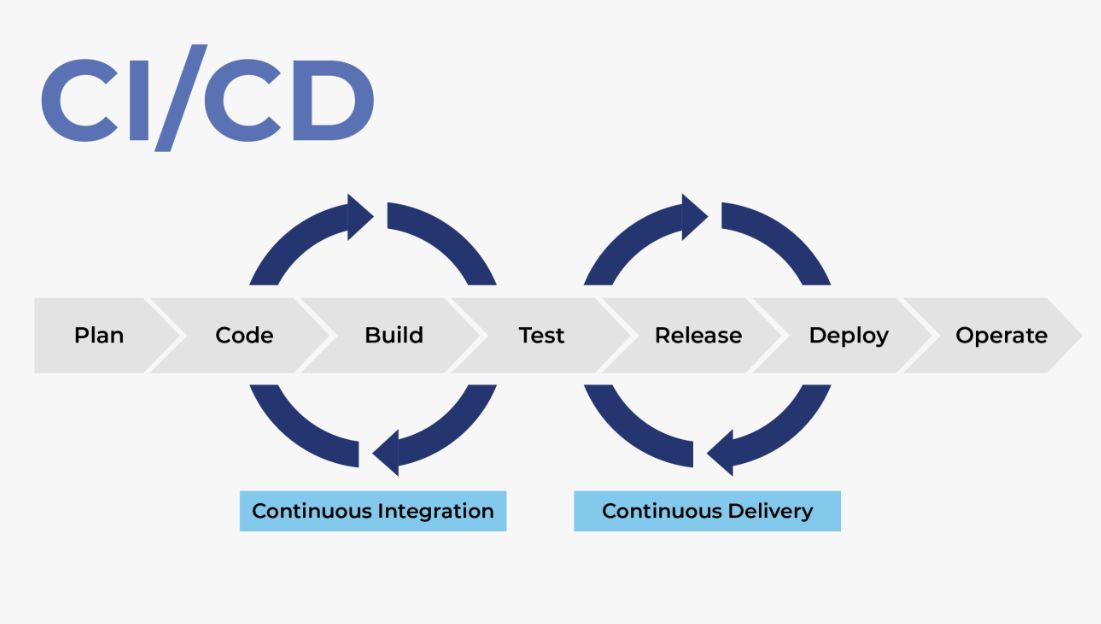

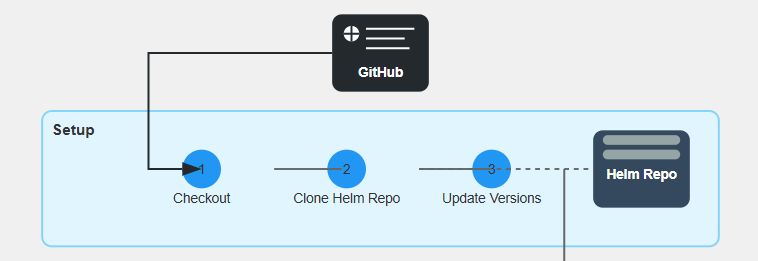

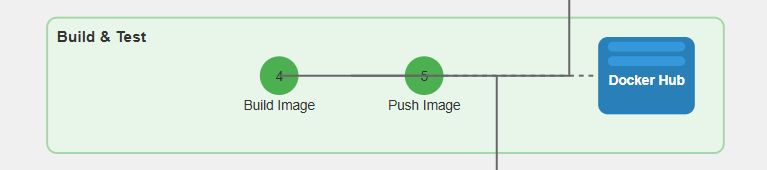

Creating Cloud Agnostic CI/CD Pipeline

A cloud-agnostic CI/CD pipeline ensures your build and deployment logic works across all providers.

Read more here: How to Setup CI/CD Pipeline Using Azure DevOps for AKS

Pipeline Configuration and Team Management

- Azure DevOps for full-scale team and repo management

- Jenkins as a free alternative with flexible plugin support

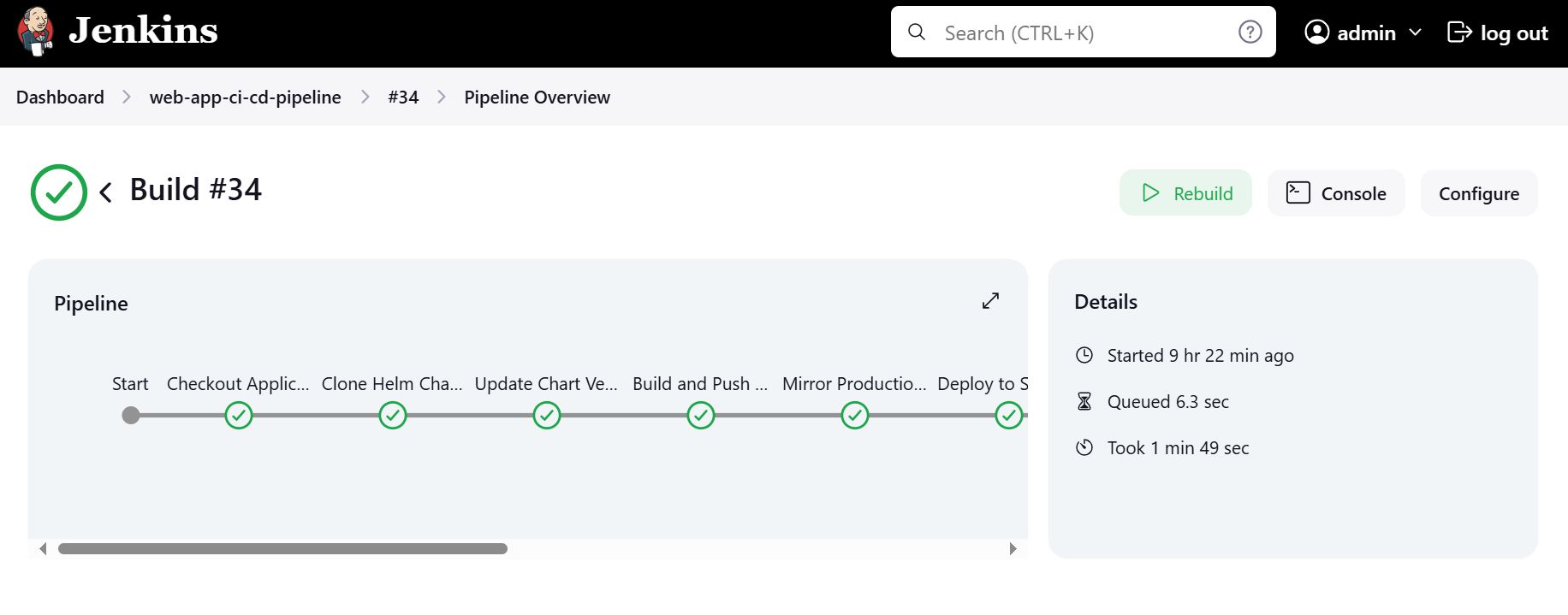

Jenkins Pipeline Stages

Tools installed on AWS free tier instance:

- Docker

- Azure CLI

- kubectl

- Jenkins

Stages:

- Checkout application

- Clone Helm charts repo

- Update chart versions

- Build + push Docker image

- Mirror prod → staging

- Deploy to staging

- Run tests

- Wait for manual approval

- Push updated Helm chart

- Cleanup staging

- Deploy to production

See full pipeline: KubernetesInfra/.jenkins

Conclusion

By adopting a cloud-agnostic approach, you can ensure that your infrastructure and CI/CD pipeline are flexible and adaptable to any cloud provider. This strategy avoids vendor lock-in, making it easier to scale and manage your environment efficiently.

More info: KubernetesInfra/.jenkins

This approach provides a robust, scalable solution for managing deployments and infrastructure, offering peace of mind as you scale your operations across different cloud platforms.